September 16th, 2024

TL;DR

The Document AI service unintentionally allows users to read any Cloud Storage object in the same project.

- Document AI Service Misconfiguration: The Document AI service agent is automatically assigned excessive permissions, allowing it to access all objects in Cloud Storage buckets in the same project.

- Data Exfiltration Risk: Malicious actors can exploit this to exfiltrate data from Cloud Storage by indirectly leveraging the service agent's permissions.

- Transitive Access Abuse: This vulnerability is an instance of transitive access abuse, a class of security flaw where unauthorized access is gained indirectly through a trusted intermediary.

- Impact to Google Cloud Customers: With this abuse case, threats move beyond first-tier “risky permissions” to include a spiderweb of undocumented transitive relationships.

Service Background:

Document AI is a Google Cloud service that extracts information from unstructured documents. It offers both pre-trained models and a platform for creating custom models. As part of Vertex AI, Document AI integrates with other AI services to serve and share models.

Document AI employs a Google-managed service account, often called a Service Agent, assigned the Role documentaicore.serviceAgent when batch processing documents. This account handles data ingestion, transformation, and output using its broad Cloud Storage permissions. This approach reduces the friction for the end user by automating identity creation and auto-assigning permissions compared to the alternative of using a customer-managed service account for execution, which would require explicit configuration and manual role assignment.

Vulnerability Description

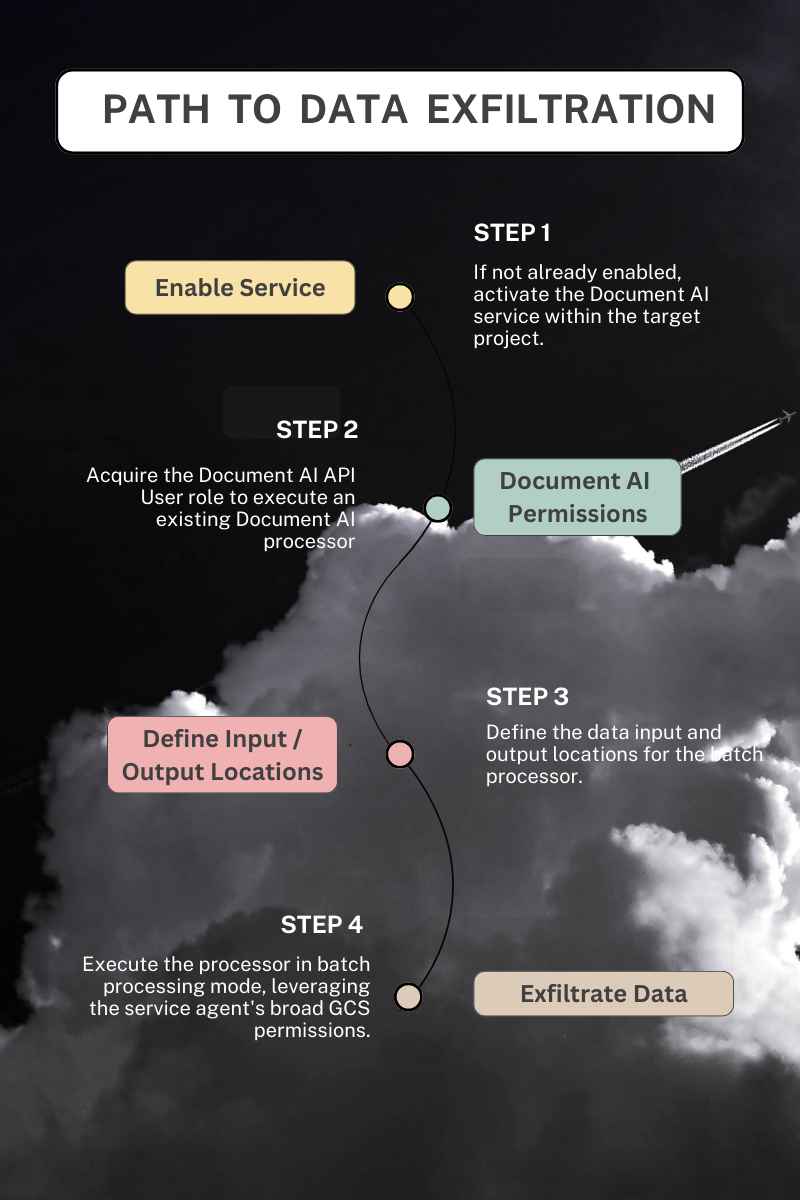

Document AI allows users to process documents stored in Cloud Storage by creating both online (standard) jobs and offline (batch) processing jobs. The service utilizes the Document AI Core Service Agent with the Role documentaicore.serviceAgent to handle data ingestion and output the results when performing batch processing. Critically, this service agent possesses broad permissions to access any Cloud Storage bucket within the same project.

Unlike online or standard processing, where the initial caller to Document AI is the principal used to retrieve GCS objects, in batch processing mode, retrieval of any input data and writing the results to a GCS bucket is executed under the context of the Document AI Core Service Agent, using its pre-assigned permissions. Because the Service Agent is used as the identity in batch processing, the initial caller's permission limitations are not respected, allowing for data exfiltration.
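The difference between the two modes can be illustrated with a toy model. This is only a sketch: the principal names, the bucket name, and the `BUCKET_READERS` table are hypothetical stand-ins for Cloud Storage IAM, and nothing here calls a Google Cloud API.

```python
# Toy model only: identities and bucket names are hypothetical.
SERVICE_AGENT = "document-ai-service-agent"

# Who may read objects in which bucket (stand-in for Cloud Storage IAM).
BUCKET_READERS = {
    "sensitive-bucket": {SERVICE_AGENT, "data-owner"},
}


def can_read(principal: str, bucket: str) -> bool:
    """Return True if the principal may read objects in the bucket."""
    return principal in BUCKET_READERS.get(bucket, set())


def process_online(caller: str, bucket: str) -> str:
    """Online mode: the object is fetched as the original caller."""
    if not can_read(caller, bucket):
        raise PermissionError(f"{caller} cannot read {bucket}")
    return f"read {bucket} as {caller}"


def process_batch(caller: str, bucket: str) -> str:
    """Batch mode: the object is fetched as the service agent, so the
    caller's own storage permissions are never consulted."""
    if not can_read(SERVICE_AGENT, bucket):
        raise PermissionError(f"{SERVICE_AGENT} cannot read {bucket}")
    return f"read {bucket} as {SERVICE_AGENT} on behalf of {caller}"
```

In this model a caller with no storage access is rejected by `process_online` but succeeds through `process_batch`, which is the shape of the transitive access described above.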

The Document AI service takes a user-defined input location from which it reads documents and a user-defined output location to which it writes results. This lets a malicious actor copy data from any Cloud Storage bucket in the project to an arbitrary bucket of their choosing, bypassing the access controls that would otherwise protect sensitive information.

Leveraging the service (and its identity) to exfiltrate data constitutes transitive access abuse, bypassing expected access controls and compromising data confidentiality.
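To make the user-defined input and output locations concrete, the sketch below assembles what a batch request might look like. It only builds a URL and a JSON body and sends nothing. The field names (`inputDocuments`, `gcsPrefix`, `documentOutputConfig`, `gcsOutputConfig`) reflect my reading of the public Document AI v1 REST reference and should be verified there; the project, processor ID, and bucket names are hypothetical.

```python
import json


def build_batch_process_request(project, location, processor_id,
                                input_prefix, output_uri):
    """Assemble the URL and JSON body for a batch processing call.

    Assumption: field names follow the public v1 REST reference.
    Nothing is sent over the network.
    """
    url = (
        f"https://{location}-documentai.googleapis.com/v1/"
        f"projects/{project}/locations/{location}/"
        f"processors/{processor_id}:batchProcess"
    )
    body = {
        # Where the service agent reads from (caller-chosen).
        "inputDocuments": {"gcsPrefix": {"gcsUriPrefix": input_prefix}},
        # Where the service agent writes results (also caller-chosen).
        "documentOutputConfig": {"gcsOutputConfig": {"gcsUri": output_uri}},
    }
    return url, body


if __name__ == "__main__":
    # Hypothetical names for illustration only.
    url, body = build_batch_process_request(
        "victim-project", "us", "example-processor-id",
        "gs://sensitive-bucket/", "gs://attacker-bucket/out/",
    )
    print(url)
    print(json.dumps(body, indent=2))
```

Both locations are supplied by the caller, while the read and the write are performed by the service agent; neither field is checked against the caller's own storage permissions in the behavior described above.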

Prerequisites

- Existing Document AI Processor

If a processor already exists in a target project, a malicious actor needs only the documentai.processors.processBatch or the documentai.processorVersions.processBatch permission to use the processor, read data from any Cloud Storage bucket in the project, and exfiltrate the objects to an arbitrary bucket.

- Creating or Updating a Processor

If no processor exists in a target project, a malicious actor would additionally need the documentai.processors.create permission to create one suitable for reading from one Cloud Storage bucket and writing the output to another. Alternatively, an existing processor could be altered with the documentai.processors.update permission to allow ingestion from a GCS bucket.

- Document AI Not Previously Used

If Document AI has not been used in a project, an attacker must enable the service before creating or using a processor. Enabling new services in GCP Projects requires the serviceusage.services.enable Cloud IAM permission, which is included in over 25 pre-defined Roles as well as the coarse-grained Roles Editor and Owner. When the Document AI service is enabled, the associated Service Agent is created and automatically granted its Project-level Role, requiring no *.setIamPolicy permissions from the principal enabling the service.
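The three scenarios above can be summarized as a small decision function. This is a simplified sketch rather than an authoritative check: the function name and arguments are hypothetical, and it ignores the documentai.processors.update variant for brevity.

```python
BATCH_PERMISSIONS = {
    "documentai.processors.processBatch",
    "documentai.processorVersions.processBatch",
}


def can_exfiltrate(held, service_enabled, processor_exists):
    """Return True if the held permissions suffice for the abuse path.

    held: set of IAM permission strings the attacker holds.
    service_enabled: whether Document AI is enabled in the project.
    processor_exists: whether a usable processor already exists.
    """
    # A batch processing permission is required in every scenario.
    if not (held & BATCH_PERMISSIONS):
        return False
    if not service_enabled:
        # A never-enabled service has no processors, so the attacker
        # must both enable the API and create a processor.
        needed = {"serviceusage.services.enable",
                  "documentai.processors.create"}
        return needed <= held
    if not processor_exists:
        return "documentai.processors.create" in held
    return True
```

For example, `can_exfiltrate({"documentai.processors.processBatch"}, True, True)` returns `True`, while the same permission alone is not enough when no processor exists.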

Proof of Concept

Four Terraform modules are provided to demonstrate data exfiltration through Document AI. Two modules exploit the processors.batchProcess and processorVersions.batchProcess methods, respectively, leveraging the service agent's broad permissions to copy data from an input Cloud Storage bucket to a user-specified output bucket. In contrast, the remaining two modules showcase the standard online modes, processors.process and processorVersions.process, which retrieve input data under the context of the original caller, adhering to expected access controls. The batch process methods effectively bypass these controls by running the job as the Document AI Service Agent.

Google’s Response

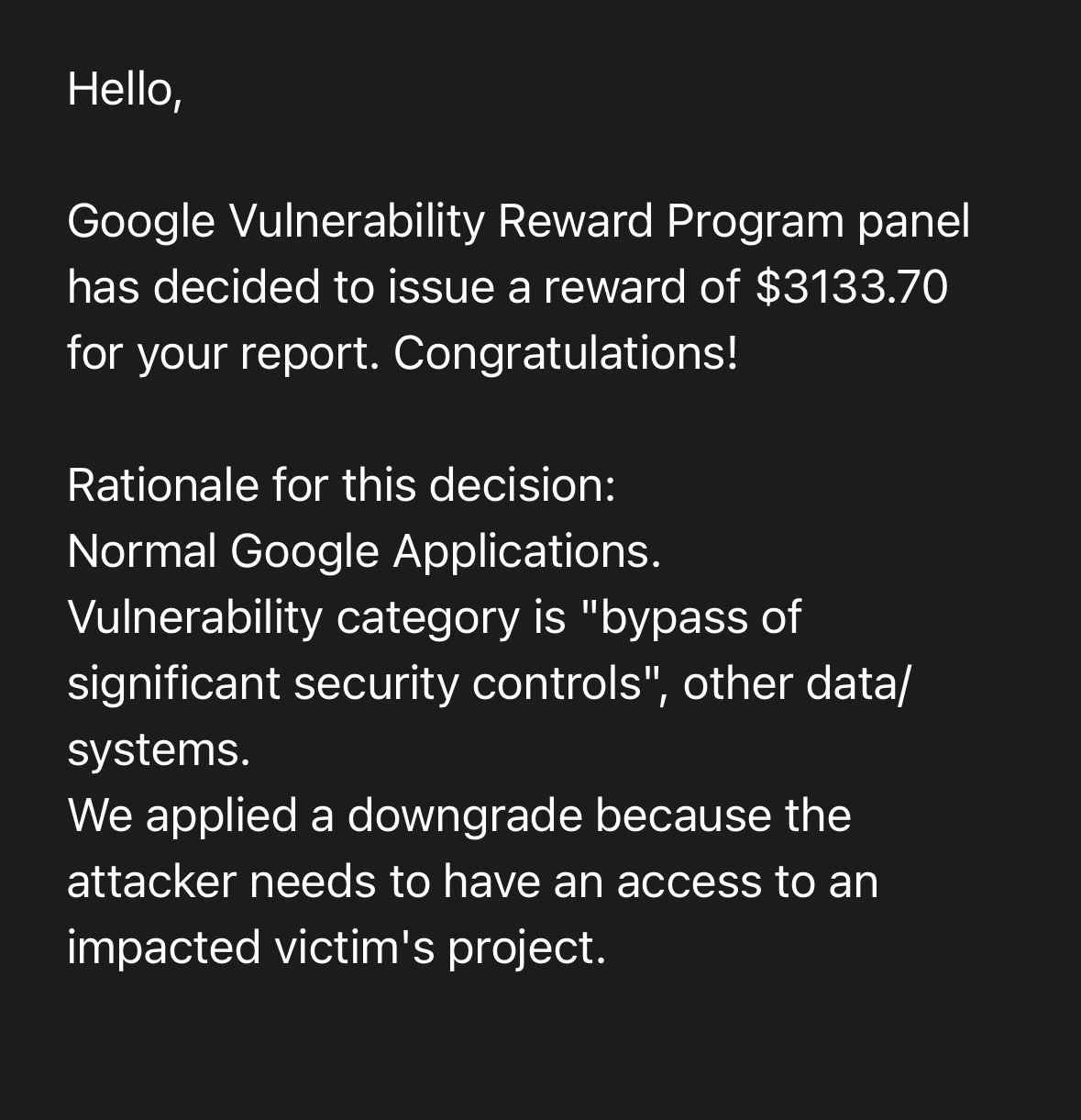

This instance of transitive access abuse was reported to Google through their Vulnerability Reward Program on April 4th, 2024. After several months of researcher efforts to identify the root cause of the issue and propose a solution, Google accepted the report as a vulnerability, classifying it as category S2C, "bypass of significant security controls", other data/systems. Google was informed of the planned public disclosure on July 2nd.

A full accounting of the reporting timeline can be viewed below; however, the following comments and actions are noteworthy:

- Google determined “.....the issue to be due to insufficient or incorrect documentation.”

- The status of the report triaged through their Vulnerability Reward Program (VRP) was changed to “Fixed” despite the issue persisting.

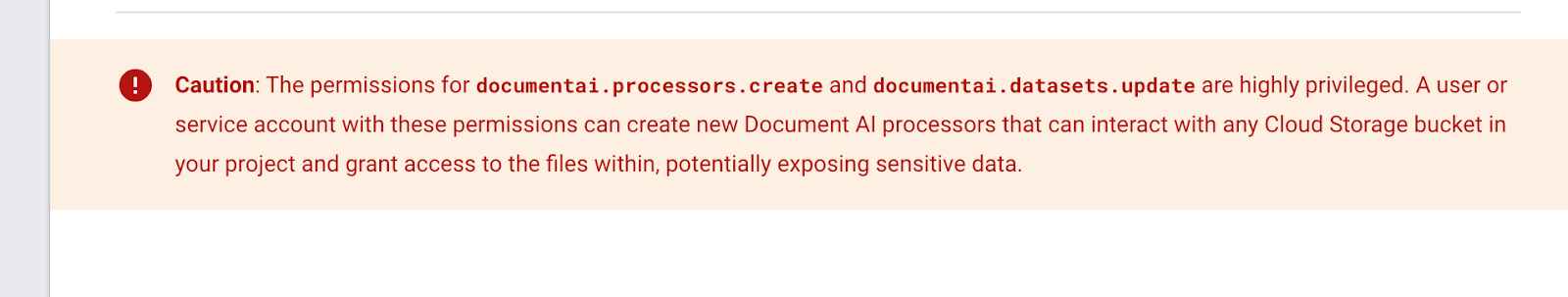

- A Caution box was added to the Document AI IAM Roles documentation page, warning customers that “The permissions for documentai.processors.create and documentai.datasets.update are highly privileged”.

- The following warning box was added sometime after the previous Internet Archive snapshot on Dec 9th, 2023. I have no way of knowing if it was added in response to my report.

- Google reversed its earlier decision to withhold a bounty, a decision that had cited "insufficient or incorrect documentation" as the root cause. The bug submission has now been classified as a "bypass of significant security controls" and awarded a bounty. There is still no indication of when or how the abuse case will be fixed.

Recommendations for Google

The Document AI Service Agent should not be automatically assigned broad, Project-Level permissions. While utilizing a Google-managed service account offers operational benefits, granting it unrestricted Cloud Storage access in conjunction with user-defined input/output locations introduces a significant security risk through transitive access abuse. The service may be working as intended but not as expected.

Impact to Google Cloud Customers

All Google Cloud customers are affected by this vulnerability if they do not prevent the enablement of the Document AI service and its usage via the Organizational Policy Constraints listed below. A customer does not currently need to be using Document AI to be affected. The mere ability of an attacker to enable the service puts critical data at risk of exfiltration.

When IAM permissions have knock-on effects on one another, answering the question ‘What could go wrong?’ for any given service becomes impossible. It's unclear what Google's next steps are for protecting their customers, whether they intend to offer an Organizational Policy constraint or will remove this transitive access abuse case altogether.

Mitigations

Unfortunately, the report submitted through Google’s Vulnerability Reward Program (VRP) has not resulted in any changes to the service despite best efforts. The mitigations mentioned below do not address the underlying vulnerability; they only reduce a customer’s potential impact.

1. Project-level Segmentation: Document AI should be used in a segmented and isolated project. Do not co-mingle the Document AI service in a project containing sensitive data. When using any SaaS or ETL service, configure the input and output locations cross-project. This will force the manual binding of IAM permissions for any Service Agent rather than relying on automatic grants.

2. Restrict the API and Service: Use the Org Policy Constraint serviceuser.services to prevent the enablement of the Document AI service when it's not needed, and restrict the API usage with the Org Policy Constraint gcp.restrictServiceUsage.
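As a starting point for the segmentation work in the first mitigation, it can help to find the projects where the service agent role is already bound. The sketch below assumes each project's IAM policy has been exported as JSON (for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`) and loaded into a dictionary keyed by project ID. The function name, project IDs, and member strings are hypothetical.

```python
SERVICE_AGENT_ROLE = "roles/documentaicore.serviceAgent"


def projects_with_service_agent(policies):
    """Return the sorted project IDs whose IAM policy binds the
    Document AI Core service agent role to at least one member.

    policies: dict mapping project ID -> IAM policy dict with the
    standard {"bindings": [{"role": ..., "members": [...]}]} shape.
    """
    flagged = []
    for project_id, policy in policies.items():
        for binding in policy.get("bindings", []):
            if binding.get("role") == SERVICE_AGENT_ROLE and binding.get("members"):
                flagged.append(project_id)
                break  # one matching binding is enough for this project
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical exported policies for illustration.
    example = {
        "data-lake": {"bindings": [
            {"role": SERVICE_AGENT_ROLE,
             "members": ["serviceAccount:example-agent@example.invalid"]},
        ]},
        "web-frontend": {"bindings": [
            {"role": "roles/viewer", "members": ["user:a@example.com"]},
        ]},
    }
    print(projects_with_service_agent(example))
```

Any flagged project that also holds sensitive Cloud Storage data is a candidate for moving either the data or the Document AI workload elsewhere.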

Conclusions

Role and permission grants only tell part of the story, especially once service functionality and the possibility of transitive access are considered. Transitive access abuse is probably not isolated to the Document AI service; it will likely recur across services (and across all the major cloud providers) as misunderstandings of the threat models persist.

Segmenting data storage, business logic, and workloads in different projects can reduce the blast radius of excessively privileged service agents, but customers rely on cloud providers to ensure they are not building privilege escalation pathways into their products and IAM schemes.

Reporting Timeline and Response

- April 4th 2024: Initial Report: Data exfiltration via Document AI Data Processing, Issue 332943600

- [Google VRP]: “Hi! Many thanks for sharing your report. This email confirms we've received your message. We'll investigate the issue you've reported and get back to you once we have an update. In the meantime, you might want to take a look at the list of frequently asked questions about Google Bug Hunters.”

- April 8th 2024: Priority changed P4 -> P3 and Status changed New -> Assigned

- [Google VRP]: “We just want to let you know that your report was triaged and we're currently looking into it.”

- April 9th 2024: Type changes Customer Issue -> Bug; Severity changed from S4 -> S2; Status changed from Assigned -> In Progress (accepted)

- [Google VRP]: “Thanks again for your report. I've filed a bug with the responsible product team based on your report. The product team will evaluate your report and decide if a fix is required. We'll let you know if the issue was fixed. Regarding our Vulnerability Reward Program: At first glance, it seems this issue is not severe enough to qualify for a reward. However, the VRP panel will take a closer look at the issue at their next meeting. We'll update you once we've come to a decision. If you don't hear back from us in 2-3 weeks or have additional information about the vulnerability, let us know! “

- April 9th 2024 - [Kat Traxler]: "As always, thank you for the quick triage. If I can help at all in describing the risk of the current configuration, please don't hesitate to reach out. Thanks, Kat”

- April 30th 2024 - [Kat Traxler]: “Hello, Bringing this to the top of your inbox. Inquiring if this has been triaged as an abuse risk? or if any update to the service is forthcoming. Thanks, Kat”

- May 2nd 2024 - [Google VRP]: "Hi! The panel members have not yet come to a decision on this report; the panel is meeting twice a week, and your report is taken into account at every meeting. Sorry for the delay and many thanks for your patience. You'll receive an automated email once a decision has been made.”

- May 2nd 2024 - [Kat Traxler]: "Thank you for the update. If I don't hear anything in ~4 weeks or so I will ping again”

- May 7th 2024 - [Google VRP]: “** NOTE: This is an automatically generated email **Hello, Google Vulnerability Reward Program panel has decided that the security impact of this issue does not meet the bar for a financial reward. However, we would like to acknowledge your contribution to Google security on our Honorable Mentions page at https://bughunters.google.com/leaderboard/honorable-mentions. If you wish to be added to it, please create a profile at https://bughunters.google.com, if you haven't already done so. Rationale for this decision: We determined the issue to be due to insufficient or incorrect documentation. Please note that the fact that this issue is not being rewarded does not mean that the product team won't fix the issue. We have filed a bug with the product team. They will review your report and decide if a fix is required. We'll let you know if the issue was fixed. Regards, Google Security Bot”

- May 7th 2024 - [Kat Traxler]: “Thanks for the response!”

- June 22nd 2024: Status changed to "Fixed"

- [Google VRP]: "Our systems show that all the bugs we created based on your report have been fixed by the product team. Feel free to check and let us know if it looks OK on your end. Thanks again for all your help!”

- June 25th 2024 - [Kat Traxler]: “Hello. I am finding that this issue still persists. Documents and training data can be exported using the permissions of the Document AI Core Service Agent, allowing a user who does not have access to storage the ability to exfiltrate data. Note this capability exists in the methods: google.cloud.documentai.uiv1beta3.DocumentService.ExportDocuments and in the batch processing capabilities: (processors.batchProcess & processorVersions.batchProcess & processors.batchProcess) Let me know if you need any further POCs”

- June 26th 2024 - [Google VRP]: “Hi! Thanks for your response, we've updated the internal bug for the team working on the issue.”

- July 2nd 2024 - [Kat Traxler]: "Hello. Given the recent 'false alarm' that this issue was fixed, I began worrying that I hadn't communicated the risk and impact appropriately. Therefore, I created a TF deployment and recorded a POC for you and the service team to watch. The TF deployment can be found at: https://github.com/KatTraxler/document-ai-samples POC video at this drive link. The point that needs to be hammered home is the principal who can process (or batch process) documents with Document AI does not need to have Storage permissions to access data in Cloud Storage and move to another location (data exfiltration) This is achieved due to the permissions assigned to the Document AI P4SA. (roles/documentaicore.serviceAgent). I recommend that Document AI be assigned a user-manage service account for its data processing, similar to Cloud Workflows. Allowing the P4SA to move user-defined data is not the correct pattern and has led to a data exfiltration vulnerability. Please change the status of this issue to indicate it has not been fixed. Public disclosure will occur at a high-profile event in September 2024”

- July 4th 2024 - [Google VRP]: "Hi! Thank you for more information about the case, we will ping the product team. And, thanks for the heads up regarding the disclosure of your report. Please read our stance on coordinated disclosure. In essence, our pledge to you is to respond promptly and fix vulnerabilities in a sensible timeframe – and in exchange, we ask for a reasonable advance notice.”

- July 29th 2024 - [Kat Traxler]: “Hi Team. Giving you all another heads up that I will be speaking about the risk for data exfiltration via the DocumentAI service at fwd:CloudsecEU on September 17th: https://pretalx.com/fwd-cloudsec-europe-2024/talk/BTT9LJ/ With an accompanying blog to be published the day before, on September 16th. Thanks, Kat”

- July 30th 2024 - [Google VRP]: “Hi. Thank you very much for the heads up!”

- August 5th 2024 - [Kat Traxler]: "Thank you. May I suggest changing the status of this report away from "Fixed"? Since it is not fixed, Thanks again. Kat”

- August 12th 2024 - [Google VRP]: “Hi. Would it be possible to share a draft of the presentation and/or blog post with us before the disclosure? Thank you”

- August 12th 2024 - [Kat Traxler]: “I’m more than happy to. I'm especially interested in your feedback for accuracy and coordinating notice. I'll have the blog post ready by August 26th”

- August 13th 2024 - [Google VRP]: "HI! Thank you!”

- August 21st 2024 - [Google VRP]: “Hi Kat, Thanks again for your report and for your patience! The team just sat down and discussed your submission as a team in much more detail. We decided to file a bug with the product team after deliberating whether this was WAI or a vulnerability. We did this because the behavior isn't clear from your perspective but the product team is best positioned to determine whether something is WAI or not. The internal bug was actually reopened on July 9th based on your comment and we will work with the product team to make this determination. We'll reopen issue 332943600 as well to reflect that now - this should have happened in July, sorry! Again, thank you for reaching out and for your report! The Google Bug Hunter Team”

- August 22nd 2024 - [Kat Traxler]: “Thanks for the update and the status change. Are you able to share your definitions of WAI and a vulnerability? To me, an issue can be both working as intended (WAI) and have significant negative security impact. Thanks. Kat"

- September 9th 2024 - [Google VRP]: “Google Vulnerability Reward Program panel has decided to issue a reward of $3133.70 for your report. Congratulations! Rationale for this decision: Normal Google Applications. Vulnerability category is "bypass of significant security controls", other data/systems. We applied a downgrade because the attacker needs to have an access to an impacted victim's project.”

Related Research

For more information on GCP Service Agents and their threat models, please see the GCP IAM 201 series covering these topics: